Batch Engine API Basics - Importing Data

Liferay’s Headless Batch Engine provides REST APIs to import and export data. Call these services to import data to Liferay.

Importing Data

Start a new Liferay DXP instance by running:

docker run -it -m 8g -p 8080:8080 liferay/dxp:2024.q1.1

Sign in to Liferay at http://localhost:8080 using the email address test@liferay.com and the password test. When prompted, change the password to learn.

Then follow these steps:

-

Download and unzip Batch Engine API Basics.

curl https://resources.learn.liferay.com/dxp/latest/en/headless-delivery/consuming-apis/liferay-g4j2.zip -Ounzip liferay-g4j2.zip -

To import data, you must have the fully qualified class name of the entity you are importing. You can get the class name from the API explorer in your installation at

/o/api. Scroll down to the Schemas section and note down thex-class-namefield of the entity you want to import. -

Use the following cURL script to import accounts to your Liferay instance. On the command line, navigate to the

curlfolder. Execute theImportTask_POST_ToInstance.shscript with the fully qualified class name of Account as a parameter../ImportTask_POST_ToInstance.sh com.liferay.headless.admin.user.dto.v1_0.AccountThe JSON response shows the creation of a new import task. Note the

idof the task:{ "className" : "com.liferay.headless.admin.user.dto.v1_0.Account", "contentType" : "JSON", "errorMessage" : "", "executeStatus" : "INITIAL", "externalReferenceCode" : "4a6ab4b0-12cc-e8e3-fc1a-4726ebc09df2", "failedItems" : [ ], "id" : 1234, "importStrategy" : "ON_ERROR_FAIL", "operation" : "CREATE", "processedItemsCount" : 0, "startTime" : "2022-10-19T14:19:43Z", "totalItemsCount" : 0 } -

The current

executeStatusisINITIAL. It denotes the submission of a task to the Batch Engine. You must wait until this isCOMPLETEDto verify the data. On the command line, execute theImportTask_GET_ById.shscript and replace1234with the ID of your import task../ImportTask_GET_ById.sh 1234{ "className" : "com.liferay.headless.admin.user.dto.v1_0.Account", "contentType" : "JSON", "endTime" : "2022-10-19T12:18:59Z", "errorMessage" : "", "executeStatus" : "COMPLETED", "externalReferenceCode" : "7d256faa-9b7e-9589-e85c-3a72f68b8f08", "failedItems" : [ ], "id" : 1234, "importStrategy" : "ON_ERROR_FAIL", "operation" : "CREATE", "processedItemsCount" : 2, "startTime" : "2022-10-19T12:18:58Z", "totalItemsCount" : 2 }If the

executeStatusisCOMPLETED, you can verify the imported data. If not, execute the command again to ensure the task has finished execution. If theexecuteStatusshowsFAILED, check theerrorMessagefield to understand what went wrong. -

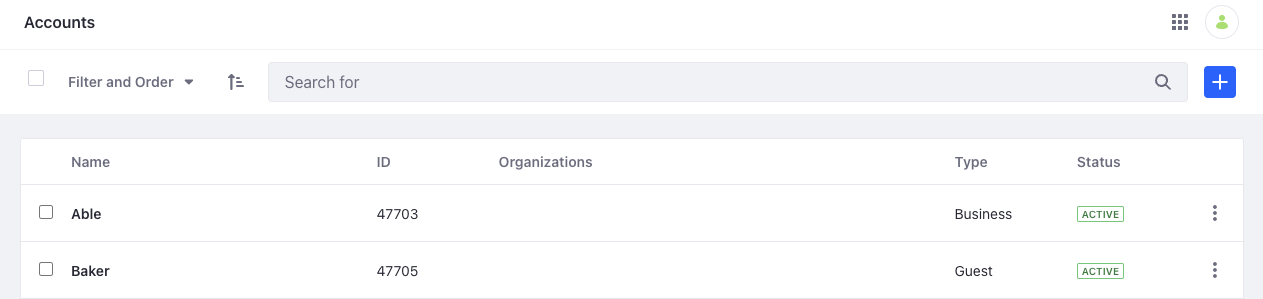

Verify the imported data by opening the Global Menu (

), and navigating to Control Panel → Accounts. See that two new accounts have been added.

), and navigating to Control Panel → Accounts. See that two new accounts have been added.

-

You can also call the The REST service using the Java client. Navigate out of the

curlfolder and into thejavafolder. Compile the source files:javac -classpath .:* *.java -

Run the

ImportTask_POST_ToInstanceclass. Replaceablewith the fully qualified name of the class andbakerwith the JSON data you want to import.java -classpath .:* -DclassName=able -Ddata=baker ImportTask_POST_ToInstanceFor example, import

Accountdata:java -classpath .:* -DclassName=com.liferay.headless.admin.user.dto.v1_0.Account -Ddata="[{\"name\": \"Able\", \"type\": \"business\"}, {\"name\": \"Baker\", \"type\": \"guest\"}]" ImportTask_POST_ToInstanceNote the

idof the import task from the JSON response. -

Run the

ImportTask_GET_ByIdclass. Replace1234with the ID of your import task. Once theexecuteStatusshowsCOMPLETED, you can verify the data as shown in the steps above.java -cp .:* -DimportTaskId=1234 ImportTask_GET_ById
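The "run the command again until the task has finished" step can also be automated. The following is a minimal sketch, not part of the sample project: the class name ImportTaskStatusPoller, the 30-attempt limit, and the one-second delay are assumptions chosen for illustration. It uses the JDK's built-in HTTP client (Java 11+) against the same endpoint and credentials as ImportTask_GET_ById.sh, and reads executeStatus with a simple regular expression rather than a JSON library.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.Base64;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper; it is not included in liferay-g4j2.zip.
class ImportTaskStatusPoller {

    private static final Pattern EXECUTE_STATUS = Pattern.compile(
        "\"executeStatus\"\\s*:\\s*\"([A-Z_]+)\"");

    // Returns the executeStatus value found in the response text, or null.
    static String extractExecuteStatus(String json) {
        Matcher matcher = EXECUTE_STATUS.matcher(json);
        return matcher.find() ? matcher.group(1) : null;
    }

    // Requests the import task once and returns the raw JSON body.
    static String fetchImportTask(HttpClient client, long importTaskId)
        throws Exception {

        String credentials = Base64.getEncoder().encodeToString(
            "test@liferay.com:learn".getBytes(StandardCharsets.UTF_8));

        HttpRequest request = HttpRequest.newBuilder(
                URI.create(
                    "http://localhost:8080/o/headless-batch-engine/v1.0" +
                        "/import-task/" + importTaskId))
            .header("Authorization", "Basic " + credentials)
            .GET()
            .build();

        return client.send(
            request, HttpResponse.BodyHandlers.ofString()).body();
    }

    public static void main(String[] args) throws Exception {
        if (args.length == 0) {
            System.out.println(
                "Usage: java ImportTaskStatusPoller <importTaskId>");
            return;
        }

        long importTaskId = Long.parseLong(args[0]);
        HttpClient client = HttpClient.newHttpClient();

        // Assumed limits: at most 30 checks, one second apart.
        for (int attempt = 0; attempt < 30; attempt++) {
            String json = fetchImportTask(client, importTaskId);
            String status = extractExecuteStatus(json);

            System.out.println("executeStatus: " + status);

            if ("COMPLETED".equals(status) || "FAILED".equals(status)) {
                System.out.println(json);
                return;
            }

            Thread.sleep(1000);
        }

        System.out.println("Gave up waiting for the import task to finish.");
    }
}
```

Run it with the task ID as the only argument, for example java ImportTaskStatusPoller 1234. It stops on either COMPLETED or FAILED so that a failed task's errorMessage is printed instead of being polled forever.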

Examine the cURL Command

The ImportTask_POST_ToInstance.sh script calls the REST service using cURL.

curl \
    "http://localhost:8080/o/headless-batch-engine/v1.0/import-task/${1}" \
    --data-raw '
        [
            {
                "name": "Able",
                "type": "business"
            },
            {
                "name": "Baker",
                "type": "guest"
            }
        ]' \
    --header "Content-Type: application/json" \
    --request "POST" \
    --user "test@liferay.com:learn"

Here are the command’s arguments:

| Arguments | Description |
|---|---|
| --header "Content-Type: application/json" | Indicates that the request body format is JSON. |
| --request "POST" | The HTTP method to invoke at the specified endpoint. |
| "http://localhost:8080/o/headless-batch-engine/v1.0/import-task/${1}" | The REST service endpoint. ${1} is the script’s first parameter: the fully qualified class name. |
| --data-raw '[{"name": "Able", "type": "business"}, {"name": "Baker", "type": "guest"}]' | The data you are requesting to post. |
| --user "test@liferay.com:learn" | Basic authentication credentials. |

Basic authentication is used here for demonstration purposes. For production, you should authorize users via OAuth2. See Use OAuth2 to authorize users for a sample React application that uses OAuth2.

The other cURL commands use similar JSON arguments.
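To make the role of each cURL argument concrete, here is a rough sketch of the same request assembled with the JDK's java.net.http API (Java 11+). It is an illustration only and not how the sample project works, since the project uses the ImportTaskResource client instead. The class and method names (ImportTaskRequestSketch, buildImportRequest) are hypothetical. The sketch only builds the request so each part can be inspected; sending it would require passing it to HttpClient.send.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.nio.charset.StandardCharsets;
import java.util.Base64;

// Hypothetical illustration of what the cURL arguments translate to.
class ImportTaskRequestSketch {

    static HttpRequest buildImportRequest(String className, String jsonData) {
        // --user "test@liferay.com:learn" becomes a Basic Authorization header.
        String credentials = Base64.getEncoder().encodeToString(
            "test@liferay.com:learn".getBytes(StandardCharsets.UTF_8));

        return HttpRequest.newBuilder(
                // The endpoint, with the class name in place of ${1}.
                URI.create(
                    "http://localhost:8080/o/headless-batch-engine/v1.0" +
                        "/import-task/" + className))
            // --header "Content-Type: application/json"
            .header("Content-Type", "application/json")
            .header("Authorization", "Basic " + credentials)
            // --request "POST" with the --data-raw body
            .POST(HttpRequest.BodyPublishers.ofString(jsonData))
            .build();
    }

    public static void main(String[] args) {
        HttpRequest request = buildImportRequest(
            "com.liferay.headless.admin.user.dto.v1_0.Account",
            "[{\"name\": \"Able\", \"type\": \"business\"}, " +
                "{\"name\": \"Baker\", \"type\": \"guest\"}]");

        // Print the pieces of the request without sending it.
        System.out.println(request.method() + " " + request.uri());
        System.out.println(request.headers().map());
    }
}
```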

Examine the Java Class

The ImportTask_POST_ToInstance.java class imports data by calling the Batch Engine’s import task service.

public static void main(String[] args) throws Exception {
    ImportTaskResource.Builder builder = ImportTaskResource.builder();

    ImportTaskResource importTaskResource = builder.authentication(
        "test@liferay.com", "learn"
    ).build();

    System.out.println(
        importTaskResource.postImportTask(
            String.valueOf(System.getProperty("className")), null, null,
            null, null, null, null,
            String.valueOf(System.getProperty("data"))));
}

This class invokes the REST service using only three lines of code:

| Line (abbreviated) | Description |
|---|---|
| ImportTaskResource.Builder builder = ... | Gets a Builder for generating an ImportTaskResource service instance. |
| ImportTaskResource importTaskResource = builder.authentication(...).build(); | Specifies basic authentication and generates an ImportTaskResource service instance. |
| importTaskResource.postImportTask(...); | Calls the importTaskResource.postImportTask method and passes the data to post. |

Note that the project includes the com.liferay.headless.batch.engine.client.jar file as a dependency. You can find client JAR dependency information for all REST applications in the API explorer in your installation at /o/api.

The main method’s comment demonstrates running the class.

The other example Java classes are similar to this one, but call different ImportTaskResource methods.

See ImportTaskResource for service details.

Below are examples of calling other Batch Engine import REST services using cURL and Java.

Get the ImportTask Status

You can get the status of an import task by executing the following cURL or Java command. Replace 1234 with the ID of your import task.

ImportTask_GET_ById.sh

Command:

./ImportTask_GET_ById.sh 1234

Code:

curl \
    "http://localhost:8080/o/headless-batch-engine/v1.0/import-task/${1}" \
    --user "test@liferay.com:learn"

ImportTask_GET_ById.java

Run the ImportTask_GET_ById class. Replace 1234 with the ID of your import task.

Command:

java -classpath .:* -DimportTaskId=1234 ImportTask_GET_ById

Code:

public static void main(String[] args) throws Exception {
    ImportTaskResource.Builder builder = ImportTaskResource.builder();

    ImportTaskResource importTaskResource = builder.authentication(
        "test@liferay.com", "learn"
    ).build();

    System.out.println(
        importTaskResource.getImportTask(
            Long.valueOf(System.getProperty("importTaskId"))));
}

Importing Data to a Site

You can import data to a site by executing the following cURL or Java command. The example imports blog posts to a site. Find your Site’s ID and replace 1234 with it. When using another entity, you must also update the fully qualified class name parameter and the data to import in the cURL script.

ImportTask_POST_ToSite.sh

Command:

./ImportTask_POST_ToSite.sh com.liferay.headless.delivery.dto.v1_0.BlogPosting 1234

Code:

curl \
    "http://localhost:8080/o/headless-batch-engine/v1.0/import-task/${1}?siteId=${2}" \
    --data-raw '
        [
            {
                "articleBody": "Foo",
                "headline": "Able"
            },
            {
                "articleBody": "Bar",
                "headline": "Baker"
            }
        ]' \
    --header "Content-Type: application/json" \
    --request "POST" \
    --user "test@liferay.com:learn"

ImportTask_POST_ToSite.java

Run the ImportTask_POST_ToSite class. Replace 1234 with your site’s ID, able with the fully qualified name of the class, and baker with the JSON data you want to import.

Command:

java -classpath .:* -DsiteId=1234 -DclassName=able -Ddata=baker ImportTask_POST_ToSite

For example, import BlogPosting data:

java -classpath .:* -DsiteId=1234 -DclassName=com.liferay.headless.delivery.dto.v1_0.BlogPosting -Ddata="[{\"articleBody\": \"Foo\", \"headline\": \"Able\"}, {\"articleBody\": \"Bar\", \"headline\": \"Baker\"}]" ImportTask_POST_ToSite

Code:

public static void main(String[] args) throws Exception {
    ImportTaskResource.Builder builder = ImportTaskResource.builder();

    ImportTaskResource importTaskResource = builder.authentication(
        "test@liferay.com", "learn"
    ).parameter(
        "siteId", String.valueOf(System.getProperty("siteId"))
    ).build();

    System.out.println(
        importTaskResource.postImportTask(
            String.valueOf(System.getProperty("className")), null, null,
            null, null, null, null,
            String.valueOf(System.getProperty("data"))));
}

The JSON response displays information from the newly created import task. Note the id to keep track of its executeStatus.

Put the Imported Data

You can use the following cURL or Java command to completely overwrite existing data using the Batch Engine. The example shows updating existing account data. When using another entity, you must update the fully qualified class name parameter and the data to overwrite in the cURL script.

ImportTask_PUT_ById.sh

Command:

./ImportTask_PUT_ById.sh com.liferay.headless.admin.user.dto.v1_0.Account

Code:

curl \
    "http://localhost:8080/o/headless-batch-engine/v1.0/import-task/${1}" \
    --data-raw '
        [
            {
                "id": 1234,
                "name": "Bar",
                "type": "business"
            },
            {
                "id": 5678,
                "name": "Goo",
                "type": "guest"
            }
        ]' \
    --header "Content-Type: application/json" \
    --request "PUT" \
    --user "test@liferay.com:learn"

ImportTask_PUT_ById.java

Run the ImportTask_PUT_ById class. Replace able with the fully qualified name of the class, and baker with the JSON data that overwrites the existing data. The data must contain the IDs of the entities you want to overwrite.

Command:

java -classpath .:* -DclassName=able -Ddata=baker ImportTask_PUT_ById

For instance, if you want to overwrite existing Account data, replace 1234 and 5678 with the IDs of the existing Accounts:

java -classpath .:* -DclassName=com.liferay.headless.admin.user.dto.v1_0.Account -Ddata="[{\"id\": 1234, \"name\": \"Bar\", \"type\": \"business\"}, {\"id\": 5678, \"name\": \"Goo\", \"type\": \"guest\"}]" ImportTask_PUT_ById

Code:

public static void main(String[] args) throws Exception {
    ImportTaskResource.Builder builder = ImportTaskResource.builder();

    ImportTaskResource importTaskResource = builder.authentication(
        "test@liferay.com", "learn"
    ).build();

    System.out.println(
        importTaskResource.putImportTask(
            String.valueOf(System.getProperty("className")), "", "", "", "",
            null, String.valueOf(System.getProperty("data"))));
}
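The -Ddata values in the commands above are hand-escaped JSON, which is easy to get wrong as the payload grows. As a hedged alternative, the sketch below builds the same kind of JSON array from Java maps. ImportDataJson, quote, and toJsonArray are hypothetical names, not part of the sample project or the Liferay client, and the helper only handles flat objects with string, number, boolean, or null values. The resulting string could be passed as the data argument wherever the examples read System.getProperty("data").

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.StringJoiner;

// Hypothetical helper for building flat JSON arrays without manual escaping.
class ImportDataJson {

    // Wraps a value in quotes and escapes characters JSON strings require.
    static String quote(String value) {
        StringBuilder sb = new StringBuilder("\"");

        for (char c : value.toCharArray()) {
            switch (c) {
                case '"': sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n"); break;
                case '\r': sb.append("\\r"); break;
                case '\t': sb.append("\\t"); break;
                default:
                    if (c < 0x20) {
                        sb.append(String.format("\\u%04x", (int) c));
                    }
                    else {
                        sb.append(c);
                    }
            }
        }

        return sb.append('"').toString();
    }

    // Serializes each map as one JSON object, preserving key order.
    static String toJsonArray(List<Map<String, Object>> items) {
        StringJoiner array = new StringJoiner(", ", "[", "]");

        for (Map<String, Object> item : items) {
            StringJoiner object = new StringJoiner(", ", "{", "}");

            for (Map.Entry<String, Object> entry : item.entrySet()) {
                Object value = entry.getValue();
                String json;

                if (value == null || value instanceof Number ||
                    value instanceof Boolean) {

                    // Numbers, booleans, and null are written unquoted.
                    json = String.valueOf(value);
                }
                else {
                    json = quote(String.valueOf(value));
                }

                object.add(quote(entry.getKey()) + ": " + json);
            }

            array.add(object.toString());
        }

        return array.toString();
    }

    public static void main(String[] args) {
        Map<String, Object> first = new LinkedHashMap<>();
        first.put("id", 1234);
        first.put("name", "Bar");
        first.put("type", "business");

        Map<String, Object> second = new LinkedHashMap<>();
        second.put("id", 5678);
        second.put("name", "Goo");
        second.put("type", "guest");

        System.out.println(toJsonArray(List.of(first, second)));
    }
}
```

LinkedHashMap is used so the keys come out in insertion order, which keeps the output easy to compare with the hand-written payloads.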

Delete the Imported Data

You can use the following cURL or Java command to delete existing data using the Batch Engine. The example deletes account data. When using another entity, you must update the fully qualified class name parameter and also the data to delete in the cURL script.

ImportTask_DELETE_ById.sh

Command:

./ImportTask_DELETE_ById.sh com.liferay.headless.admin.user.dto.v1_0.Account

Code:

curl \
    "http://localhost:8080/o/headless-batch-engine/v1.0/import-task/${1}" \
    --data-raw '
        [
            {
                "id": 1234
            },
            {
                "id": 5678
            }
        ]' \
    --header "Content-Type: application/json" \
    --request "DELETE" \
    --user "test@liferay.com:learn"

ImportTask_DELETE_ById.java

Run the ImportTask_DELETE_ById class. Replace able with the fully qualified name of the class, and baker with the JSON data identifying what to delete. The data must contain the IDs of the entities you want to delete.

Command:

java -classpath .:* -DclassName=able -Ddata=baker ImportTask_DELETE_ById

For instance, if you want to delete Account data, replace 1234 and 5678 with the IDs of the existing accounts:

java -classpath .:* -DclassName=com.liferay.headless.admin.user.dto.v1_0.Account -Ddata="[{\"id\": 1234}, {\"id\": 5678}]" ImportTask_DELETE_ById

Code:

public static void main(String[] args) throws Exception {
    ImportTaskResource.Builder builder = ImportTaskResource.builder();

    ImportTaskResource importTaskResource = builder.authentication(
        "test@liferay.com", "learn"
    ).build();

    System.out.println(
        importTaskResource.deleteImportTask(
            String.valueOf(System.getProperty("className")), null, null,
            null, null, String.valueOf(System.getProperty("data"))));
}

Get Contents of the Imported Data

You can retrieve the data you imported with the following cURL and Java commands. Replace 1234 with the import task’s ID. The data is downloaded to the current directory as a .zip file named file.zip.

ImportTaskContent_GET_ById.sh

Command:

./ImportTaskContent_GET_ById.sh 1234

Code:

curl \
    "http://localhost:8080/o/headless-batch-engine/v1.0/import-task/${1}/content" \
    --output file.zip \
    --user "test@liferay.com:learn"

ImportTaskContent_GET_ById.java

Command:

java -classpath .:* -DimportTaskId=1234 ImportTaskContent_GET_ById

Code:

public static void main(String[] args) throws Exception {
    ImportTaskResource.Builder builder = ImportTaskResource.builder();

    ImportTaskResource importTaskResource = builder.authentication(
        "test@liferay.com", "learn"
    ).build();

    HttpInvoker.HttpResponse httpResponse =
        importTaskResource.getImportTaskContentHttpResponse(
            Long.valueOf(System.getProperty("importTaskId")));

    try (FileOutputStream fileOutputStream = new FileOutputStream(
            "file.zip")) {

        fileOutputStream.write(httpResponse.getBinaryContent());
    }
}
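Once file.zip is downloaded, you may want to check what it contains without extracting it. The sketch below lists the archive's entry names using the JDK's java.util.zip classes. ImportTaskContentInspector is a hypothetical class name, and the sketch makes no assumption about which files Liferay places in the archive; it simply prints whatever entries are there.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.Enumeration;
import java.util.List;
import java.util.zip.ZipEntry;
import java.util.zip.ZipFile;

// Hypothetical helper; it is not included in liferay-g4j2.zip.
class ImportTaskContentInspector {

    // Returns the names of all entries in the given zip archive.
    static List<String> listEntries(Path zipPath) throws IOException {
        List<String> names = new ArrayList<>();

        try (ZipFile zipFile = new ZipFile(zipPath.toFile())) {
            Enumeration<? extends ZipEntry> entries = zipFile.entries();

            while (entries.hasMoreElements()) {
                names.add(entries.nextElement().getName());
            }
        }

        return names;
    }

    public static void main(String[] args) throws IOException {
        // Defaults to the file name used by the examples above.
        Path zipPath = Paths.get(args.length > 0 ? args[0] : "file.zip");

        if (!Files.exists(zipPath)) {
            System.out.println("No such file: " + zipPath);
            return;
        }

        for (String name : listEntries(zipPath)) {
            System.out.println(name);
        }
    }
}
```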

The API Explorer lists all of the Headless Batch Engine services and schemas and has an interface to try out each service.